
Aneesh Muppidi

I'm currently a Rhodes Scholar at Oxford University, co-advised by Jakob Foerster (Oxford FLAIR) and João Henriques (Oxford VGG).

Email / Scholar / Follow on X / GitHub

Previously, I completed my concurrent master's and undergraduate degrees (AB/SM) at Harvard in CS and Neuroscience. I was advised by Prof. Hank Yang at the Computational Robotics Lab, Prof. Samuel Gershman at Harvard's Kempner Institute, where I was a KURE fellow, and Prof. Ila Fiete at MIT. I was President of the Harvard Computational Neuroscience Undergraduate Society and of Harvard Dharma. I wrote for The Harvard Crimson. I also like film photography.

How can agents learn in unknown worlds? (read my SOP)


Recent News


Open Source


Research


Predictive Scheduling for Efficient Inference-Time Reasoning in Large Language Models

Katrina Brown*, Aneesh Muppidi*, Rana Shahout (* equal contribution)

ICML ES-FoMo, 2025 / project page / code / arXiv

Train lightweight predictors to estimate reasoning requirements before generation, then greedily allocate tokens where they matter most.


Parameter-free Optimization for Reinforcement Learning

Aneesh Muppidi, Zhiyu Zhang, Hank Yang

NeurIPS, 2024 / project page / code / arXiv

Mitigate plasticity loss, accelerate forward transfer, and avoid policy collapse with just one line of code.


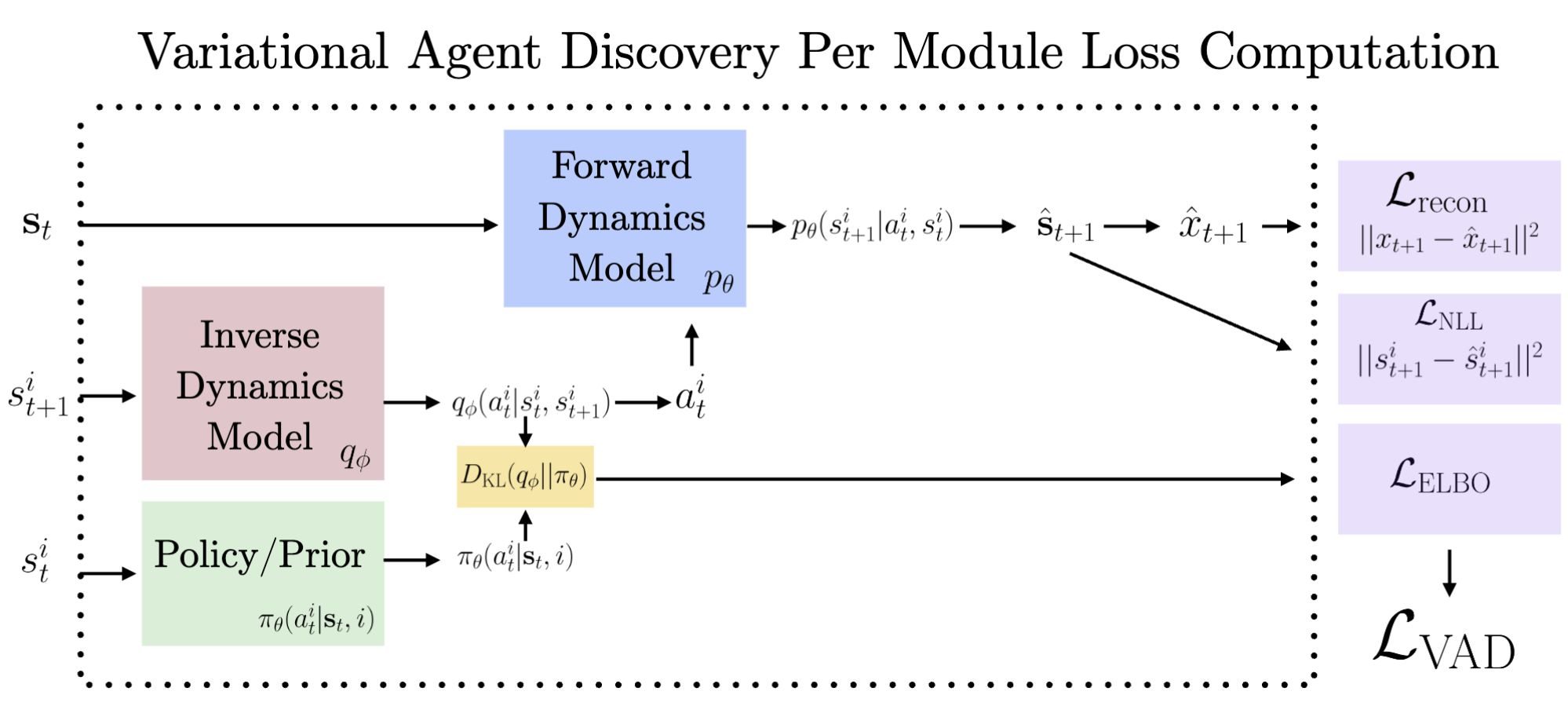

Variational Agent Discovery

Aneesh Muppidi, Wilka Carvalho, Samuel Gershman

How can we discover agents using only vision?


Permutation Invariant Learning with High-Dimensional Particle Filters

Akhilan Boopathy*, Aneesh Muppidi*, Peggy Yang, Abhiram Iyer, William Yue, Ila Fiete (* equal contribution)

arXiv, 2024 / project page / code / arXiv

What is the optimal order of training data? Particle filters can be invariant to permutations of the training data, mitigating plasticity loss and catastrophic forgetting.


Resampling-free Particle Filters in High-dimensions

Akhilan Boopathy, Aneesh Muppidi, Peggy Yang, Abhiram Iyer, William Yue, Ila Fiete

ICRA, 2024 / arXiv

Particle filters for pose estimation.


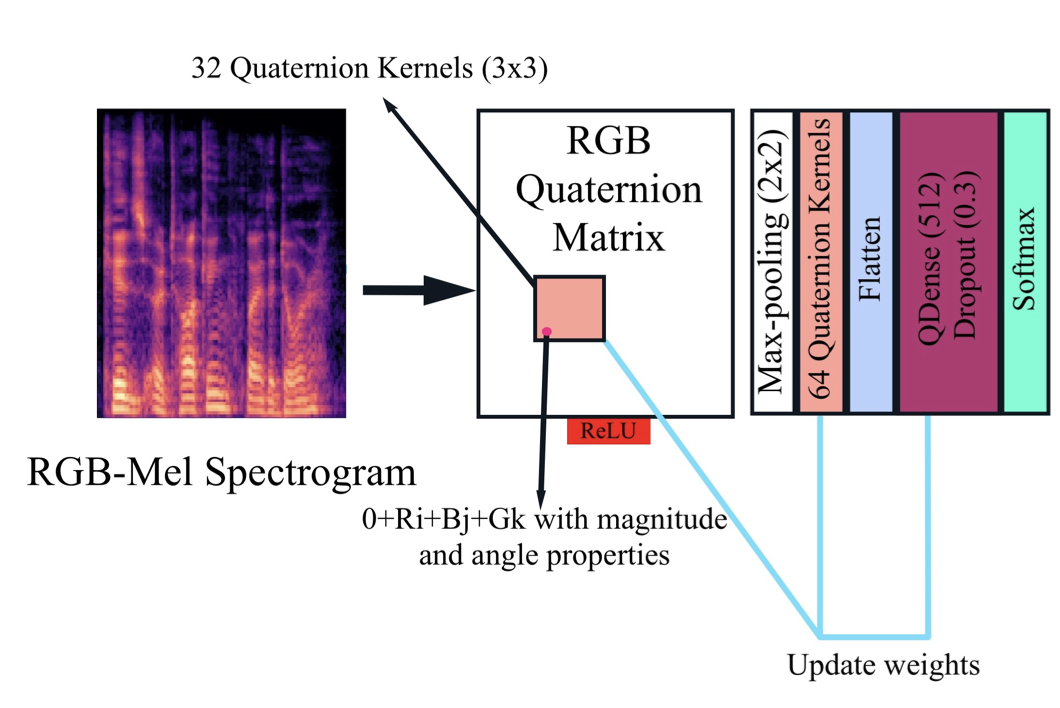

Speech Emotion Recognition using Quaternion Convolutional Neural Networks

Aneesh Muppidi, Martin Radfar

ICASSP, 2021 / arXiv

Quaternion CNNs (QCNNs) outperform state-of-the-art speech emotion recognition (SER) models.

Blog


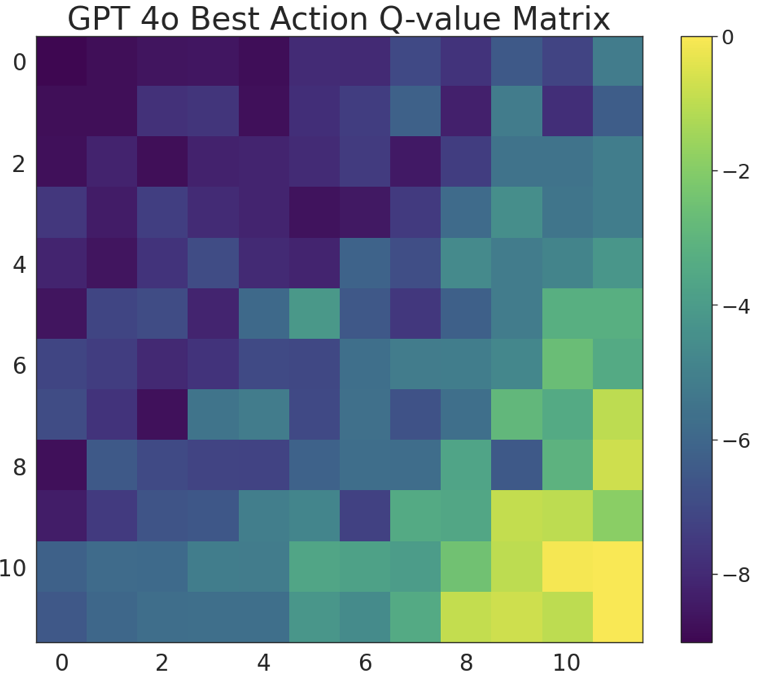

LLMs as Value-Function Approximators

December 2024 / read post

Bootstrapping temporal-difference learning with LLM priors to speed up Q-learning convergence, and exploring zero-shot value approximation in grid worlds and high-dimensional RL environments.

Favorites


A big thanks to the kind Jon Barron.